Now that you have access to the sandbox and have explored the search console, as covered in my first blog, it is time to configure the web crawler to your needs. Every website you want to crawl has its own crawler requirements. For instance, the POC I was working with was behind a login, so we had to let the crawler through, either by skipping authentication for its requests or by adding authentication parameters to the request headers. Let’s see how that can be done and how we did it.

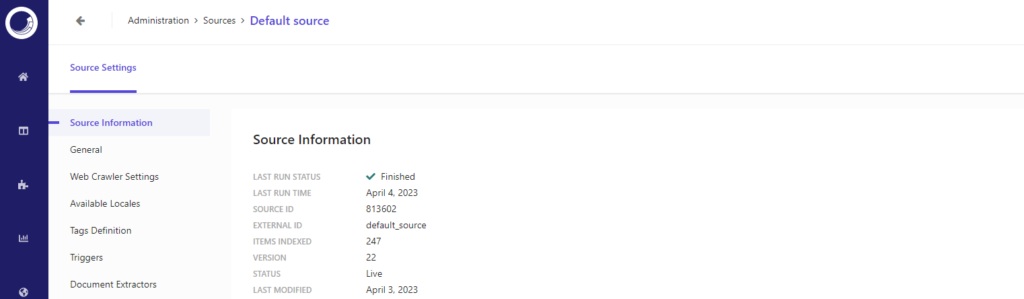

First things first: log in to the search console and go to the crawler settings. They are located in the Sources section under Administrative Tools. Once you open the source of concern, it should look similar to the screenshot. Do note that at least one source should already have been set up by the search team, based on the form details I talk about in my first blog.

If you scroll down, you should see Web Crawler Settings. Click the edit icon to open them for editing. In our case, we made two changes here:

- Add the best-practice header ‘user-agent’ with the value ‘sitecorebot’, which Sitecore recommends in its documentation.

- Add another header that lets us detect these requests on the application side and skip authentication. Of course, there are other ways to do this, such as using the dedicated Authentication settings on the Web Crawler; the Sitecore documentation describes that process.
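To make the second step concrete, here is a minimal sketch of what the application-side check could look like. It is not our actual implementation: the header name `x-crawler-token` and its secret value are hypothetical placeholders (this post does not name the second header), and only the ‘user-agent’ value ‘sitecorebot’ comes from the crawler settings above.

```python
import hmac
from typing import Mapping

# Hypothetical names: the post does not say what the second header is called.
CRAWLER_HEADER = "x-crawler-token"
CRAWLER_TOKEN = "replace-with-a-long-random-secret"
# Value configured for the 'user-agent' header in the Web Crawler settings.
CRAWLER_USER_AGENT = "sitecorebot"


def is_trusted_crawler(headers: Mapping[str, str]) -> bool:
    """Return True when a request carries both crawler headers."""
    # HTTP header names are case-insensitive, so normalise them before lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    user_agent = normalised.get("user-agent", "")
    token = normalised.get(CRAWLER_HEADER, "")
    # The user agent alone is easy to spoof, so the secret header must match too.
    # compare_digest avoids leaking the token through timing differences.
    token_ok = hmac.compare_digest(token.encode(), CRAWLER_TOKEN.encode())
    return user_agent.lower() == CRAWLER_USER_AGENT and token_ok
```

An authentication layer could call `is_trusted_crawler(request_headers)` early and let the request through without a login only when it returns `True`. Treat the extra header value like a password, since anyone who knows it can bypass the login.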

A few other important things to check while you are in Source Settings:

- Ensure the trigger(s) are set up to match the content you need indexed. In my case, I made sure the sitemap trigger was present and had the right values.

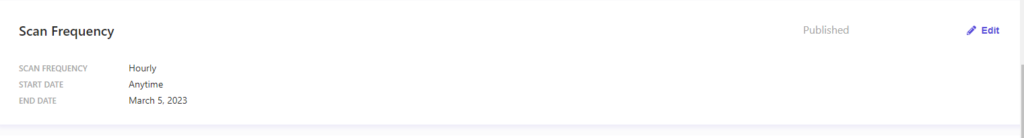

- Check that the scan frequency matches your needs.
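If you rely on a sitemap trigger, one quick sanity check is to list the URLs your sitemap actually exposes and compare them with what you expect to be indexed. The sketch below is only an illustration under assumptions: it expects a standard `urlset` sitemap already loaded as a string, and the function name `sitemap_urls` is made up for this example.

```python
import xml.etree.ElementTree as ET
from typing import List

# Namespace used by standard sitemap files (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> List[str]:
    """Return every <loc> URL found in a <urlset> sitemap document."""
    root = ET.fromstring(sitemap_xml)
    # Each <url> entry holds one <loc>; skip entries whose <loc> is empty.
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text and loc.text.strip()
    ]
```

For example, passing in the contents of your sitemap file returns a plain list of page URLs that you can scan for missing sections or leftover test pages before the crawler runs.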

That is it! After these steps, the crawler was able to crawl our website, which sits behind a login. A happy crawler means we can move on to the next important part: ensuring the index has all the right data for API calls to function well. Let’s get into that in the next blog.